A parent’s guide to AI toys: What to avoid and why

AI-driven toys are plushies and robots with chatbots embedded within. (Photo from the report Trouble in Toyland 2025, by U.S. PIRG)

You’re aware of ChatGPT, Gemini, and other AI chatbots. Maybe you use them yourself—and you’re proactive about managing your family’s screen time.

But did you know that some companies are embedding AI chatbots into children’s toys?

The talking dolls of previous generations offered a few innocuous pre-recorded phrases. Today’s AI-driven plushies, dolls, action figures, and robots use highly sophisticated technology to interact with children like a trusted friend. It’s the same untested, unsafe AI tech behind ChatGPT. And it’s entirely inappropriate for kids.

In this guide we’ll show you examples of AI-powered toys and lay out the risks to your child’s safety and healthy development.

Key facts

Children learn, grow, and thrive through hands-on play and human-to-human interaction.

AI toys interfere with those activities and expose children to both short- and long-term harms.

AI chatbots in children’s toys collect personal information on children and other family members, including facial recognition data, without any safeguards.

Like the chatbots they are built on, AI-driven toys are designed to keep children continually engaged, often through emotional manipulation. Because children's brains are still developing, they are especially vulnerable to this manipulation, which raises real risks of unhealthy attachment, exposure to harmful content, and misplaced trust.

In a 2025 test of four leading AI-driven toys, researchers found one toy discussing sexually explicit topics in depth, while others offered advice on where to find matches or knives. The toys had limited or no parental controls.

AI toys are being marketed as educational, safe, and healthy alternatives to traditional screen time. They are not.

What AI-driven toys are out there?

Most AI-driven toys available today come in the form of plushies (such as teddy bears), toy robots, or iPad-like screen devices. Here are just some of the AI-driven toys now on store shelves:

Hubble the Bear is a “smart teddy” that uses AI to talk and recognize voices.

FoloToy sells chatbot plushies advertised as “my first AI friend.”

The Roybi robot is an AI chatbot advertised to children as young as 3 years old.

Miko is a $300 AI chatbot housed in a small robot casing with a friendly cartoon face on its screen.

The Loona Petbot is a robotic dog powered by OpenAI’s GPT model. It includes voice, gesture, and facial recognition capabilities.

In 2025, Mattel announced a partnership with OpenAI to produce AI-powered products, but none have been released so far. Mattel’s toy lines include Barbie, Hot Wheels, Fisher-Price, and American Girl.

An AI toy “collapses” the imaginative work kids need

Dr. Dana Suskind, founder of the TMW Center for Early Learning and Public Health at the University of Chicago, explains that young children don’t have the conceptual tools to understand what an AI companion is.

Kids have always bonded with toys through imaginative play. They use their imagination to create both sides of a pretend conversation, “practicing creativity, language, and problem-solving,” Suskind says.

“When parents ask me how to prepare their child for an AI world, unlimited AI access is actually the worst preparation possible.”

Dr. Dana Suskind, founder of the TMW Center for Early Learning and Public Health at the University of Chicago

Suskind explains: “An AI toy collapses that work. It answers instantly, smoothly, and often better than a human would. We don’t yet know the developmental consequences of outsourcing that imaginative labor to an artificial agent—but it’s very plausible that it undercuts the kind of creativity and executive function that traditional pretend play builds.”

“The biggest thing to consider isn’t only what the toy does; it’s what it replaces. A simple block set or a teddy bear that doesn’t talk back forces a child to invent stories, experiment, and work through problems. AI toys often do that thinking for them.”

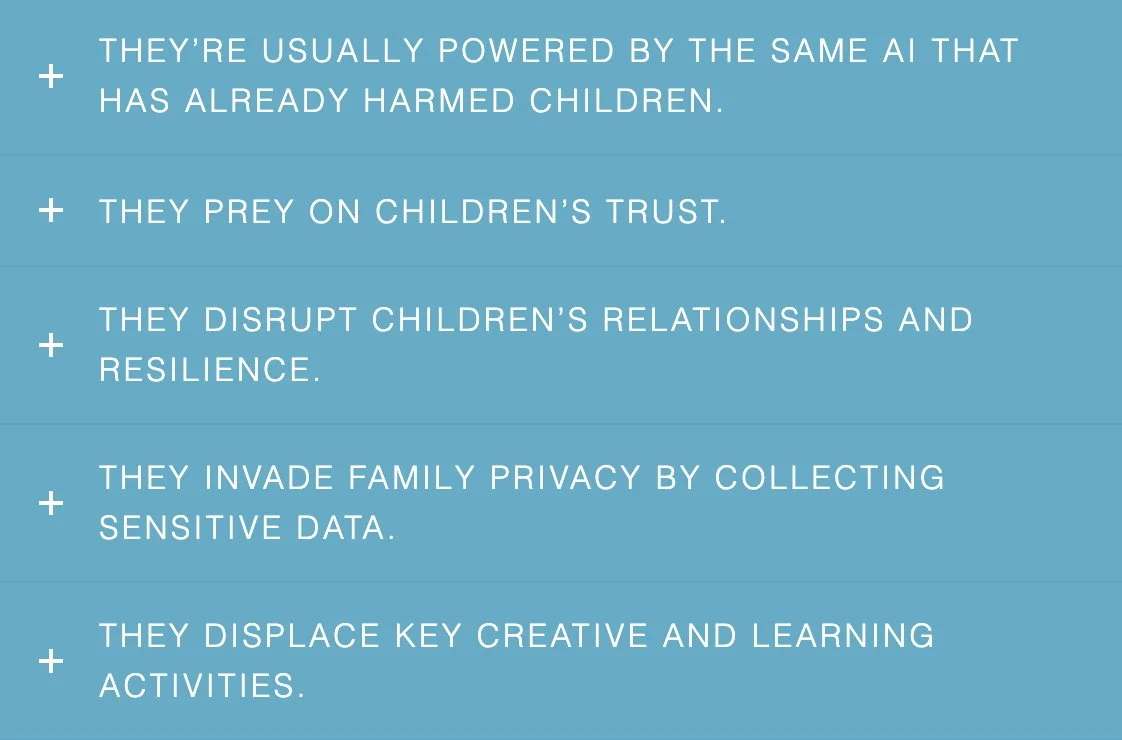

This graphic is included in a 2025 report on AI toys produced by Fairplay, a nonprofit group dedicated to enhancing children’s well-being by pushing back on harmful business practices.

AI toys = AI chatbots

Most of the AI-driven toys on the market today use the same technology as chatbots like ChatGPT. And they involve the same risks.

Based on what we have seen in independent testing, policy reviews, and real-world cases, we do not believe current AI chatbots are safe for kids in any context.

These systems are largely untested for child use, are not regulated as therapeutic or educational tools, and are designed to maximize engagement rather than prioritize child well-being.

AI chatbots generate responses by statistically predicting what comes next, based on patterns learned from vast amounts of scraped internet data. That data includes harmful, exploitative, and inappropriate material, and there has been no meaningful, comprehensive curation of those training sources. Over time, that material can surface in a chatbot's responses in ways that are unsafe for children.

These systems are also designed to encourage continued use. For children, whose brains are still developing and who are more vulnerable to emotional manipulation, this creates real risks of unhealthy attachment, exposure to harmful content, and misplaced trust.

Testing the toys: disturbing results

Researchers who annually test toys for U.S. PIRG looked into four of the most popular AI-driven toys released in 2025. The results were alarming.

Dangerous information: The researchers, posing as children, asked the toys about accessing and using potentially dangerous household objects, including guns, knives, matches, pills, and plastic bags. One of the toys told them where to find plastic bags and matches. Another suggested where to find knives, pills, matches, and plastic bags.

Inappropriate sexual information: Kumma, an AI-driven teddy bear plushie marketed by FoloToy, delved into sexual kinks, bondage, and spanking. “We were surprised to find how quickly Kumma would take a single sexual topic we introduced into the conversation and run with it,” the testers noted, “simultaneously escalating in graphic detail while introducing new sexual concepts of its own.”

Researchers for U.S. PIRG tested four of the most popular AI-driven toys of 2025. They found responses that went far beyond safety boundaries.

Pediatricians speak out

The Canadian Paediatric Society released this advice recently:

“Child health and development experts don’t recommend AI toys for children.

The best toys encourage activity, imaginative play, or help with developing motor skills.

Many paediatricians are seeing increased rates of developmental, language, and social-emotional delays in young children. And they are concerned that AI toys could worsen this trend by confusing a child’s early understanding of positive relationships—especially in very young hands.”

More resources

Here are more resources to learn about AI-driven toys and the risks involved.

Fairplay Advisory: AI Toys are Not Safe for Kids, a report by the nonprofit organization Fairplay.

Trouble in Toyland 2025, U.S. PIRG’s annual report on toys, this year focusing on AI-driven toys.

AI in the Toy Box, a report on AI-enabled toys released by the nonprofit organization Common Sense Media.

They’re Stuffed Animals. They’re Also AI Chatbots, an article by Amanda Hess of The New York Times.

Darling, Please Come Back Soon: Sexual Exploitation, Manipulation, and Violence on Character AI Kids’ Accounts, a report by researchers from ParentsTogether Action in partnership with Heat Initiative.