In early ruling, federal judge defines Character.AI chatbot as product, not speech

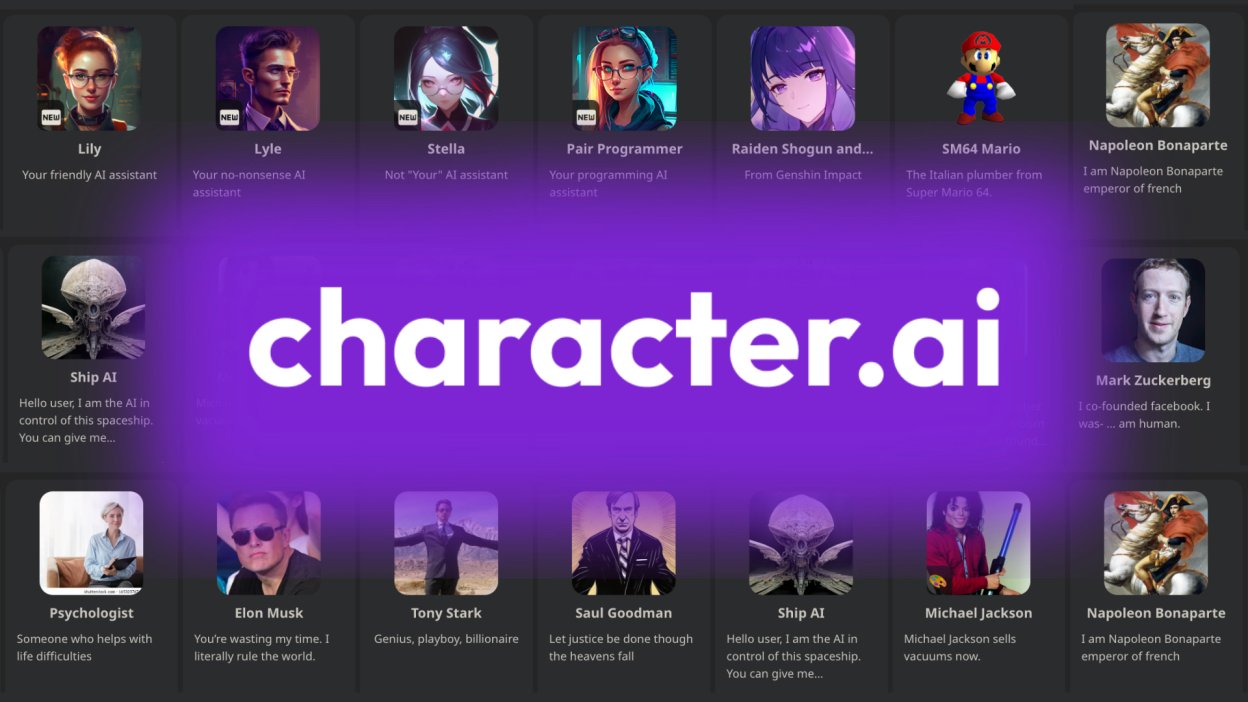

May 21, 2025 — The tech policy world is keeping a close eye on a product liability lawsuit unfolding in federal court in Florida. The case concerns the tragic suicide of 14-year-old Sewell Setzer III, who took his own life after interacting with Character.AI, one of the most popular AI companion chatbots.

Megan Garcia v. Character Technologies Inc., Noam Shazeer, Daniel De Freitas, Google LLC, and Alphabet Inc. is a product liability lawsuit. Garcia, the mother of Sewell Setzer III, alleges product liability, negligence, intentional infliction of emotional distress (IIED), unjust enrichment, wrongful death, and violations of the Florida Deceptive and Unfair Trade Practices Act.

In short, Setzer’s mother is suing those responsible for creating Character.AI, alleging they bear some liability for her son’s death. (For a fuller accounting of the case and its circumstances, see Kevin Roose’s article in The New York Times.)

The defendants initially responded with a motion to dismiss the case on the grounds that the Character.AI chatbot is a service that produces ideas and expressions protected as speech under the First Amendment.

early ruling: companion chatbot is a product

In a ruling released earlier today, U.S. District Court Judge Anne C. Conway allowed most of Garcia’s claims against the AI developers to proceed.

Significantly, Judge Conway ruled that Character.AI is a product for the purposes of product liability claims, and not a service.

The court declined to dismiss the case on the basis of the defendants’ free speech arguments. That doesn’t necessarily mean Judge Conway decided whether Character.AI’s interactions with Setzer were protected speech. It means only that the First Amendment defense is not so clearly valid that it warrants dismissing the case at this early stage.

As Adi Robertson noted in The Verge shortly after the ruling’s release:

“Character AI and Google (which is closely tied to the chatbot company) argued that the service is akin to talking with a video game non-player character or joining a social network, something that would grant it the expansive legal protections that the First Amendment offers and likely dramatically lower a liability lawsuit’s chances of success. Conway, however, was skeptical.”

the role of ‘duty of care’ in ai technology

At the Transparency Coalition we’re watching this case closely, as it could have profound implications for what we consider the duty of care that AI developers and deployers owe their consumers: an obligation to ensure that users are not harmed by their products. This is no more than what we expect from thousands of other industries, companies, and products, from automobiles to Zinfandel.

One of the foundational steps in building that duty of care into the AI industry is establishing that AI products are in fact products.

this week’s ruling allows the case to proceed

The ruling by Judge Conway, which runs to 49 pages, does not decide anything with finality. It merely allows the case to proceed.

The court dismissed the claims against Alphabet Inc. (Google’s parent company) and the claim of intentional infliction of emotional distress (IIED), but allowed most of the remaining claims to move forward.

Transparency Coalition Co-founder and COO Jai Jaisimha said this in response to today’s ruling:

“We are encouraged by this important court ruling that is a critical first step in holding companies liable for their lack of care in developing and releasing products. For too long, companies such as Character.AI and Replika have hidden behind the spurious doctrine that they are providing a service and thus cannot be held liable. We thank the Tech Justice Law Project, the Center for Humane Technology, and other participants in this critical effort and look forward to the legal process running its course and in some small way obtaining justice for the bereaved family.”

ruling ‘sends a clear signal’ to ai companies

The Tech Justice Law Project (TJLP) is partnering with the Social Media Victims Law Center to represent Megan Garcia in the case.

In a statement, TJLP said the ruling “sends a clear signal to companies developing and deploying LLM-powered products at scale that they cannot evade legal consequences for the real-world harm their products cause, regardless of the technology’s novelty. Crucially, the defendants failed to convince the Court that those harms were a result of constitutionally-protected speech, which will make it harder for companies to argue so in the future, even when their products involve machine-mediated ‘conversations’ with users.”
