New survey finds overwhelming 96% support for protecting kids online

A majority of adults supported age verification measures for AI companion chatbots, while only 4% supported the idea that children do not need any online protections. (Image: Age Assurance Attitudes Among Adults survey.)

Feb. 10, 2026 — At a time of public debate about the appropriate way to manage artificial intelligence and the digital world, Americans are in near-total agreement about one thing: There needs to be a certain level of protection for kids online.

That’s the finding of a new survey released yesterday by Common Sense Media, which found that 96% of adult respondents believe children need protection from certain types of online platforms.

Pornography, gambling, and online purchases were the top concerns, with social media and AI also registering as major concerns. The majority of adults expressed support for age verification mechanisms for all of these platforms.

Although public awareness of AI-driven companion chatbots has only emerged in the past year, researchers found that more than half of adult respondents (56%) expressed support for age verification for these products.

The survey, Age Assurance Attitudes Among Adults, was commissioned by Common Sense Media and carried out by NORC at the University of Chicago, an independent research institution known for its rigorous, objective surveys on social trends and public opinion.

Researchers interviewed 1,096 American adults 18 and older between Oct. 9 and Oct. 13, 2025.

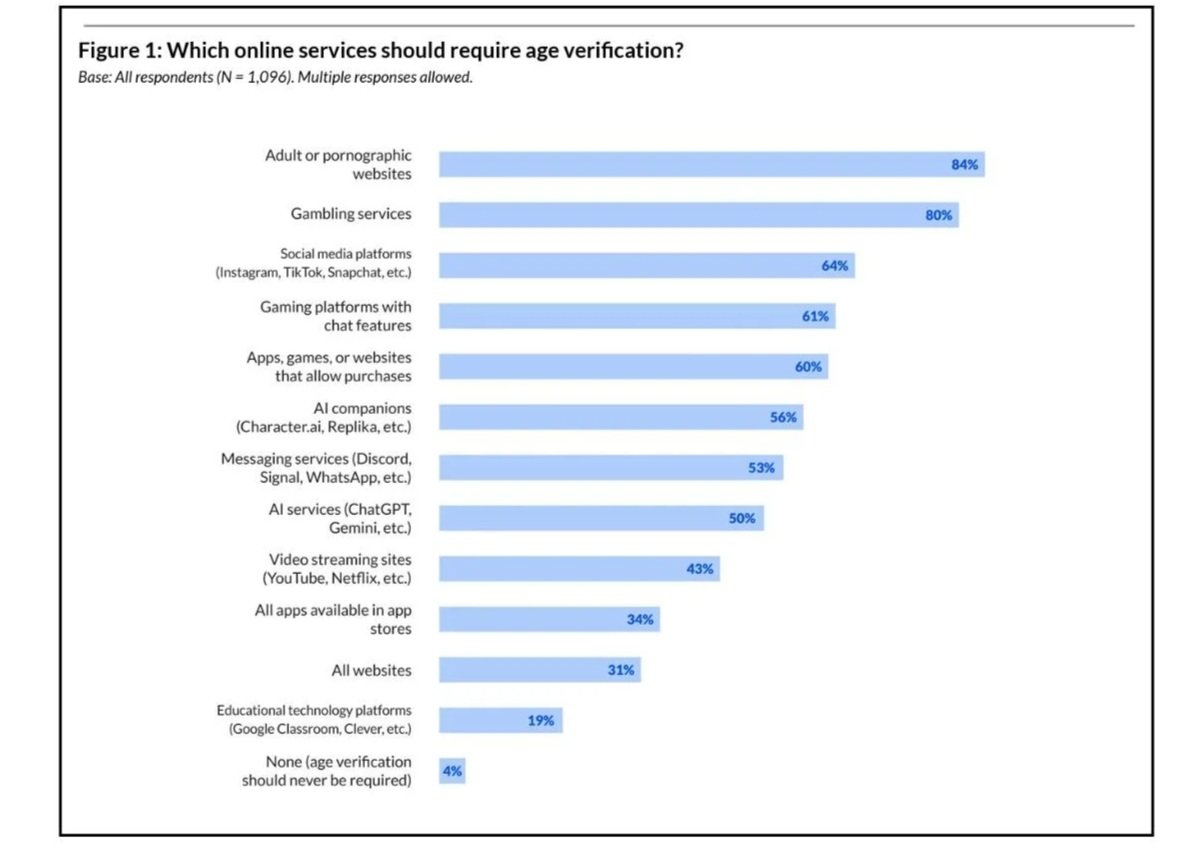

Age verification hugely popular

The survey found that more than 6 in 10 respondents want age verification for social media and gaming platforms, and half or more want it for AI chatbots and AI companions.

Source: Age Assurance Attitudes Among Adults survey.

When it comes to platforms that young people frequently use, most adults believe that these spaces should require age verification. More than 6 in 10 believe that social media platforms should require age verification (64%), and 61% say the same for gaming platforms with chat features. Eight in 10 respondents believe the same for gambling services.

For AI products, half of respondents (50%) believe that AI chatbots (like ChatGPT or Gemini) should require age verification, and 56% say the same for AI companions (like those provided by Character AI or Replika).

About one-third of respondents believe that all app store apps (34%) and all websites (31%) should require age verification, which suggests that respondents are nearly equally concerned about websites and apps. An overwhelming 84% of respondents want age verification for adult or pornographic websites.

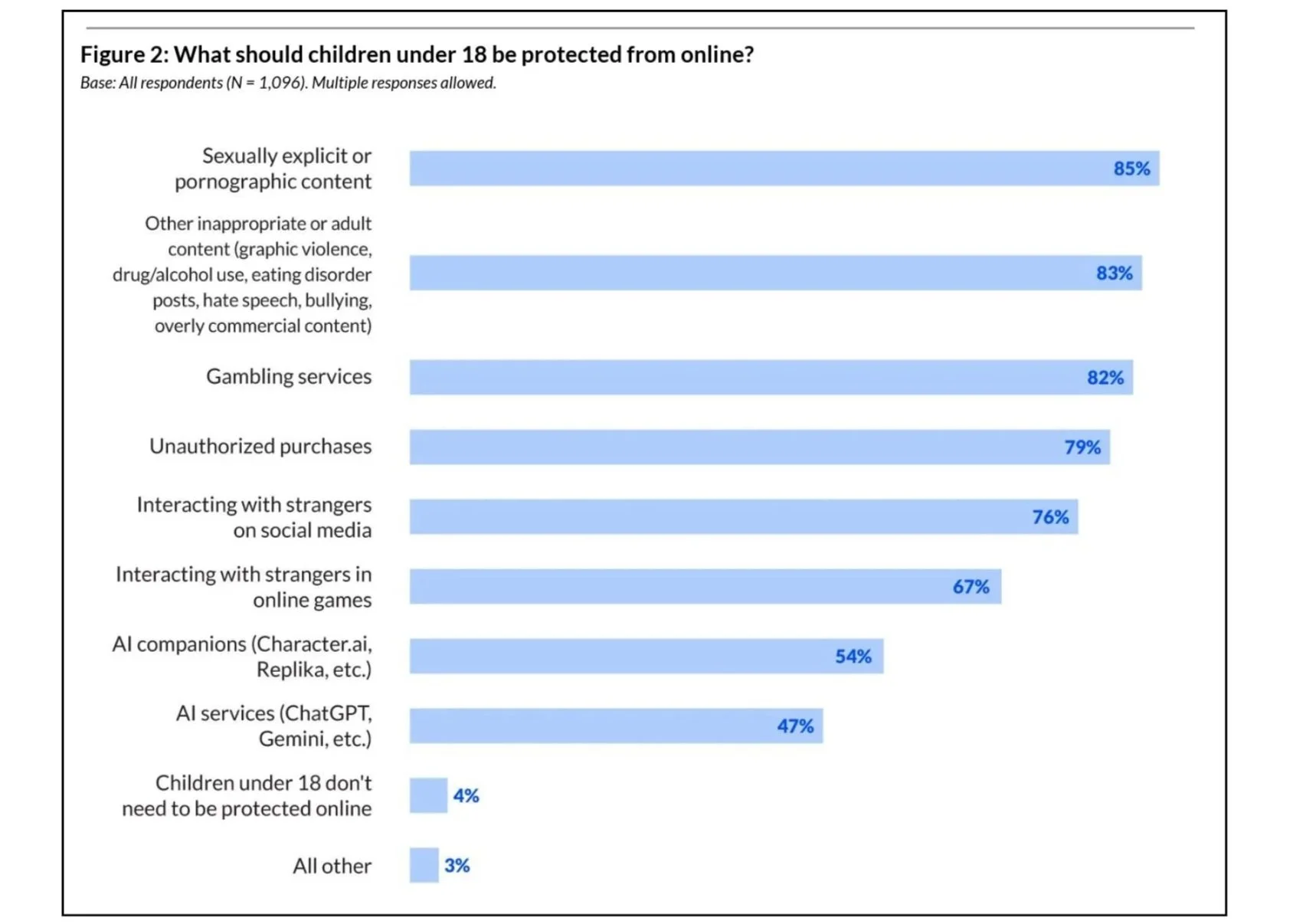

Leading concerns online

When asked what children under 18 should be protected from, respondents overwhelmingly (82%-85%) cited sexually explicit content, graphic violence, hate speech, and gambling.

Roughly half of all adults (47%-54%) expressed concern about protecting children from AI chatbots (ChatGPT, Gemini, etc.) and from companion chatbot products (Character AI, Replika).

Only 4% expressed support for the idea that children do not need to be protected online.

Source: Age Assurance Attitudes Among Adults survey.

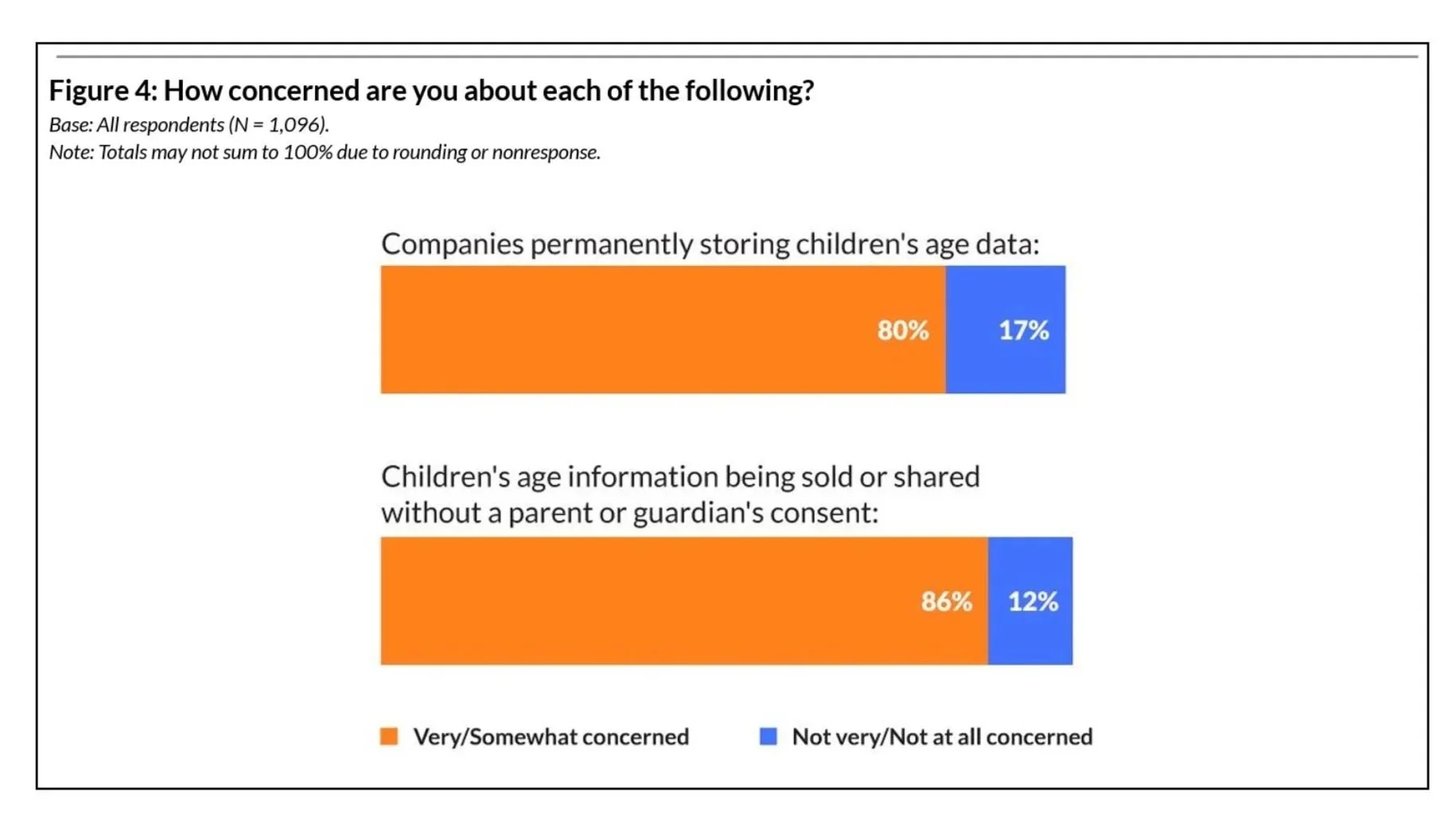

Misuse of kids' data also a big concern

The survey found that 84% of respondents are concerned about companies selling or sharing children's age data without consent, and 80% are concerned about companies permanently storing children's age information.

Source: Age Assurance Attitudes Among Adults survey.

Tools for parents and families

Transparency Coalition’s new resource for parents and families, Parents Playbook for AI, helps parents learn what they need to know to protect kids and teens from the harms of generative AI, and gives them the tools to advocate for solutions.

We combine real-world experience, technical expertise, educational content, and pragmatic advocacy to ensure families aren’t left defenseless in the face of powerful, unaccountable technologies.