Michigan lawmaker introduces AI misuse and ‘critical harm’ measures in Lansing

Michigan State Rep. Sarah Lightner, seen here speaking on the floor of the House in April, introduced two new AI-related bills in late June.

July 14, 2025 — Michigan lawmakers are weighing a recently introduced proposal that would require developers of large AI models to establish safety protocols that will prevent “critical risk,” meaning the serious harm or death of more than 100 people, or more than $100 million in damages.

Rep. Sarah Lightner (R-Springport) introduced the AI Safety and Security Transparency Act (HB 4668) on June 24. It’s now with the Judiciary Committee. Lightner also introduced HB 4667, which would establish new criminal penalties for using AI to commit a crime.

If that seems late in the season for bill introductions, it’s not unusual for Michigan, which hosts the nation’s longest legislative session. In Lansing, this year’s calendar opened in early January and is scheduled to close in late December.

Similar to New York’s ‘RAISE Act’ protections

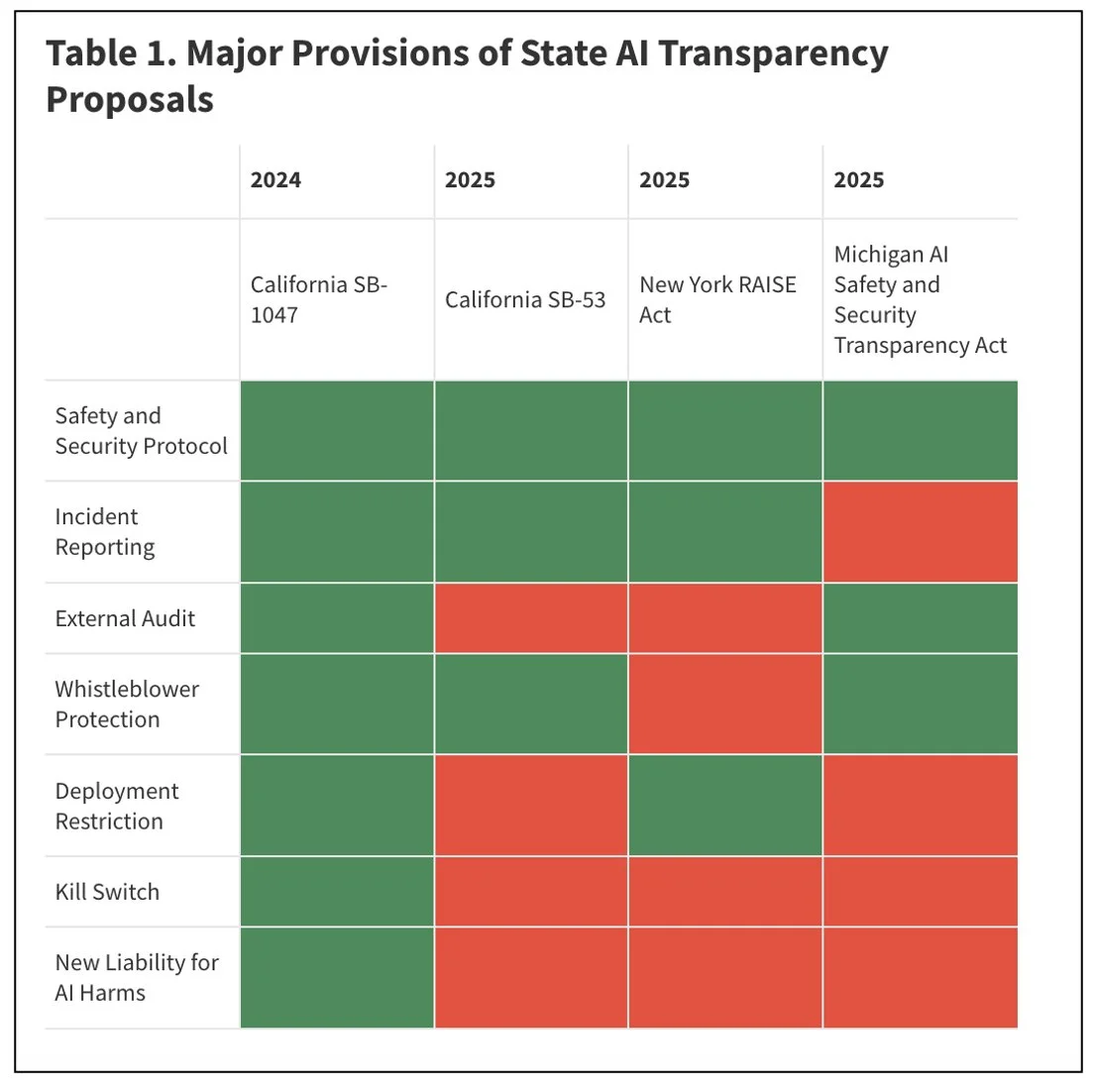

Rep. Lightner’s new bill falls into the category of “critical harm” measures meant to prevent AI developers from unleashing untested AI systems that could cause immense physical or financial harm. Similar bills are currently under consideration in California (SB 53) and New York (the RAISE Act).

Michigan’s HB 4668 shares many similarities with the RAISE Act, which was approved by the New York State legislature and now sits on Gov. Kathy Hochul’s desk.

HB 4668 would apply only to the largest companies: those that have spent $5 million or more on a single model, and $100 million or more in the prior 12 months to develop one or more AI platforms.

Those companies would have to test their AI models for risk and danger, and enact safeguards to limit potential harm. Large developers would also be required to conduct annual third-party audits.

One modification from the RAISE Act: Michigan’s HB 4668 would provide whistleblower protection to employees of AI developers.

Leading ‘critical harm’ AI bills of 2025

The chart below, included in a recent Carnegie Endowment analysis by Alasdair Phillips-Robins and Scott Singer, compares 2025’s three leading “critical harm” AI bills with California SB 1047, the controversial 2024 bill that was approved by the legislature but vetoed by Gov. Gavin Newsom.

Second bill: criminal penalties for felonious use of AI

On June 24, Rep. Lightner also introduced HB 4667, which would establish new criminal penalties for using AI to commit a crime.

One example: a bad actor uses AI to duplicate someone’s voice, then uses that vocal replica to call the person’s grandmother and scam the elderly woman out of money.

The bill would make it a felony with a mandatory 8-year sentence to develop, possess, or use an AI system with the intent to commit a crime.

Creating an AI platform for others to use for committing crimes would come with a mandatory 4-year prison sentence.

The proposal is similar to Michigan’s felony firearm law, which includes an additional criminal penalty when a person has a gun during the commission of a felony.

“This bill gives prosecutors a new way to capture the full story of what happened and to hold the perpetrator fully accountable, because if we don’t name this kind of exploitation in our law, we leave our most vulnerable residents, like a grandma, in legal limbo,” Lightner told the Michigan Advance.