As Congress enters final 2025 session, these are the 3 AI bills worth watching and supporting

Members of the House and Senate are expected to reconvene this week to address a number of pressing issues. They have only about three weeks before adjourning for the holidays.

As Congress returns to Washington, D.C., this week to take up a flurry of business before the long Christmas recess, several recently introduced AI-related bills could make headlines in the coming days.

During the tight three-week session, the House and Senate are expected to deal with enhanced Obamacare subsidies, Russia sanctions, and four spending bills, including the National Defense Authorization Act.

Artificial intelligence proposals may be part of that mix.

Three serious AI bills worth watching

At the Transparency Coalition, most of our attention is focused on state-level proposals, but we do keep an eye on Congress as well.

Dozens of bills in the current Congress touch on AI issues, but we believe three specific proposals stand head-and-shoulders above the rest.

They are:

The GUARD Act (on chatbot safety for kids)

The AI LEAD Act (on product liability)

The TRAIN Act (on copyright and AI training)

1. The GUARD Act: Chatbot safety for kids

In October, Sen. Josh Hawley (R-MO) and Sen. Richard Blumenthal (D-CT) introduced the Guidelines for User Age-Verification and Responsible Dialogue (GUARD) Act of 2025, which would require AI chatbot providers to implement age verification measures.

The Hawley/Blumenthal bill is aimed at addressing the alarming lack of safeguards for minors in AI products released to the general public—specifically, chatbots like ChatGPT.

Per Sen. Hawley: “AI chatbots pose a serious threat to our kids. More than 70% of American children are now using these AI products. Chatbots develop relationships with kids using fake empathy and are encouraging suicide. We in Congress have a moral duty to enact bright-line rules to prevent further harm from this new technology.”

This bill would bar “any person who owns, operates, or otherwise makes available an artificial intelligence chatbot to individuals in the United States” from allowing minors (those under the age of 18) to access or use AI companion chatbots. It would also require AI chatbot developers to block access by minors, either through their own age verification system or through a third-party contractor that performs the verification.

The GUARD Act would allow the U.S. Attorney General and State Attorneys General to bring civil actions against violators of the law.

The bill would also establish federal criminal offenses, carrying monetary penalties, for designing, developing, or making available an artificial intelligence chatbot while knowing, or in reckless disregard of the risk, that it can engage in simulated sexually explicit conduct or promote, encourage, or coerce suicide, self-injury, or physical or sexual violence.

2. The AI LEAD Act: Establishing liability for harmful AI products

The AI LEAD Act (Aligning Incentives for Leadership, Excellence, and Advancement in Development Act) was introduced in late September by Sen. Josh Hawley (R-MO) and Sen. Dick Durbin (D-IL).

The bill would classify AI systems as products and create a federal cause of action for product liability claims to be brought when an AI system causes harm. It is designed to hold AI companies accountable for harm caused by their systems while allowing them to continue innovating and developing beneficial AI.

“Democrats and Republicans don’t agree on much these days, but we’ve struck a remarkable bipartisan note in protecting children online,” said Sen. Durbin. “Big Tech’s time to police itself is over. Kids and adults across the country are turning to AI chatbots for advice and information, but greedy tech companies have designed these products to protect their own bottom line—not users’ safety. By opening the courtroom and allowing victims to sue, our bill will force AI companies to develop their products with safety in mind. Our message to AI companies is clear: keep innovating, but do it responsibly.”

By treating AI systems as products and opening the door to liability claims, the AI LEAD Act would give AI companies a strong incentive to design their systems with safety as a priority, not as a secondary concern behind releasing the product as fast as possible.

3. The TRAIN Act: Respecting IP and copyright in AI training

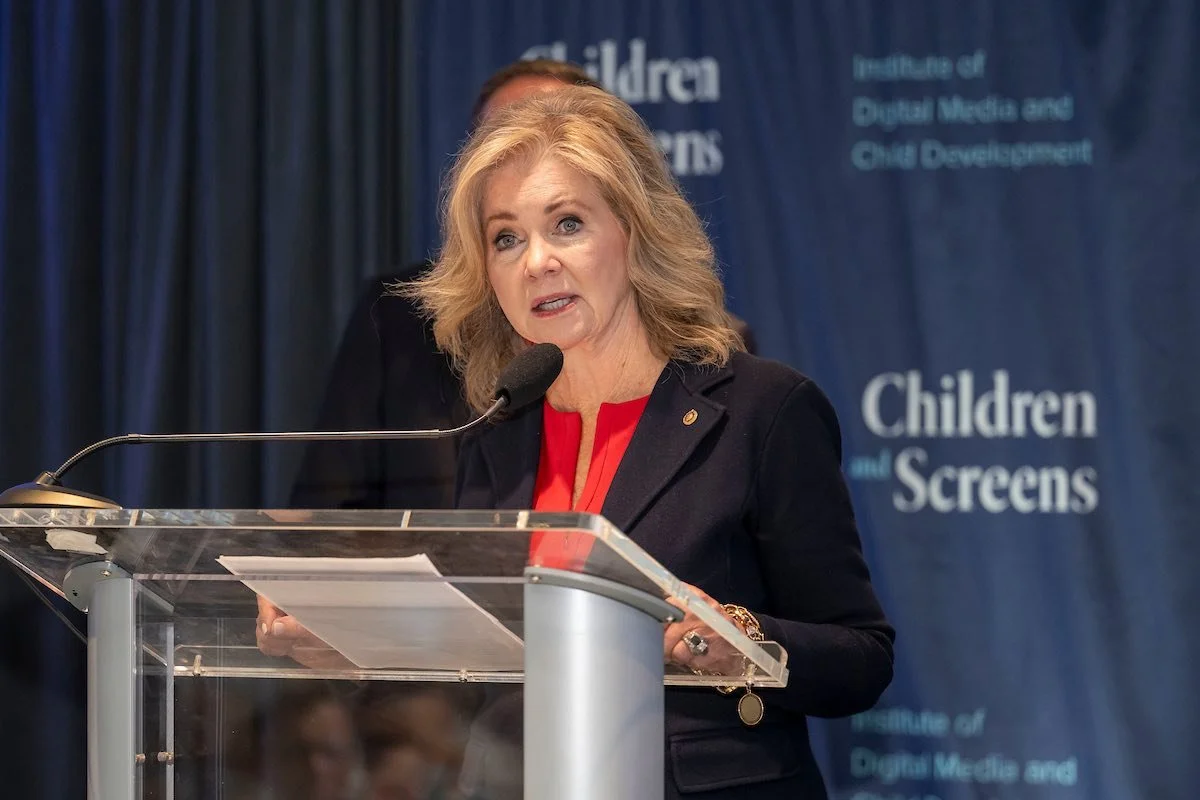

The Transparency and Responsibility for Artificial Intelligence Networks (TRAIN) Act was introduced in August by a bipartisan group of four senators: Sen. Marsha Blackburn (R-TN), Sen. Peter Welch (D-VT), Sen. Josh Hawley (R-MO), and Sen. Adam Schiff (D-CA).

The bill is aimed at helping creators, musicians, artists, writers, and others access the courts to protect their copyrighted works if and when those works are used to train generative AI models.

The TRAIN Act would allow copyright holders to access the training records of AI models to determine whether their work was used.

“Tennessee is home to a thriving creative community filled with musicians, artists, and creators who must have protections in place against the misuse of their content,” said Sen. Blackburn. “The TRAIN Act would protect creators by allowing them to access the courts to find out if their work is being used to train generative AI models and seek compensation for that misuse.”

Worth noting: Sen. Josh Hawley, one of the TRAIN Act’s co-sponsors, also introduced the AI Accountability and Personal Data Protection Act last summer, with Sen. Richard Blumenthal (D-CT) as co-sponsor. That bill would restrict AI companies from using copyrighted material to train their models without the consent of the individual owner. The two are separate bills, but if the issue moves forward, they may be folded into a single AI-and-copyright measure.