Durbin, Hawley introduce America’s first federal AI duty of care bill

Sen. Dick Durbin (D-IL), left, and Sen. Josh Hawley (R-MO) have teamed up to introduce the AI LEAD Act, a bipartisan proposal to hold AI companies accountable for the products they manufacture.

Sept. 29, 2025 — In a significant step for AI safety nationwide, two members of the U.S. Senate today introduced the first federal proposal to address product liability responsibilities in the AI industry.

The AI LEAD Act, a bipartisan measure sponsored by Sen. Josh Hawley (R-MO) and Sen. Dick Durbin (D-IL), would classify AI systems as products and create a federal cause of action for products liability claims to be brought when an AI system causes harm.

By doing so, the AI LEAD Act would give AI companies an incentive to design their systems with safety as a priority, rather than as a concern secondary to getting the product to market as quickly as possible.

The full title of the bill is the Aligning Incentives for Leadership, Excellence, and Advancement in Development (AI LEAD) Act.

duty of care: a common sense responsibility

The Hawley-Durbin proposal represents the first federal action on an issue that’s been the top focus of the Transparency Coalition in 2025.

“AI developers have a duty of care that’s no different than any other manufacturer,” says Transparency Coalition CEO Rob Eleveld. “If you sell a product, you have a duty of care to make sure people aren’t harmed by that product.”

“Product liability and consumer protection laws have over 120 years of history in the United States,” Eleveld added. “They are why we don’t worry about the brakes in our car failing while driving down the road, why we don’t worry about a bottle of Advil being poisoned due to poor manufacturing controls, while we trust a child will be safe in a car seat. Core to product liability law is the concept of duty of care, the expectation that a company will not harm its customers. To think that product liability protections for US consumers should not apply to AI products, whether chatbots or other forms, is absurd. Of course those laws apply to AI products.”

AI is a product, not a platform

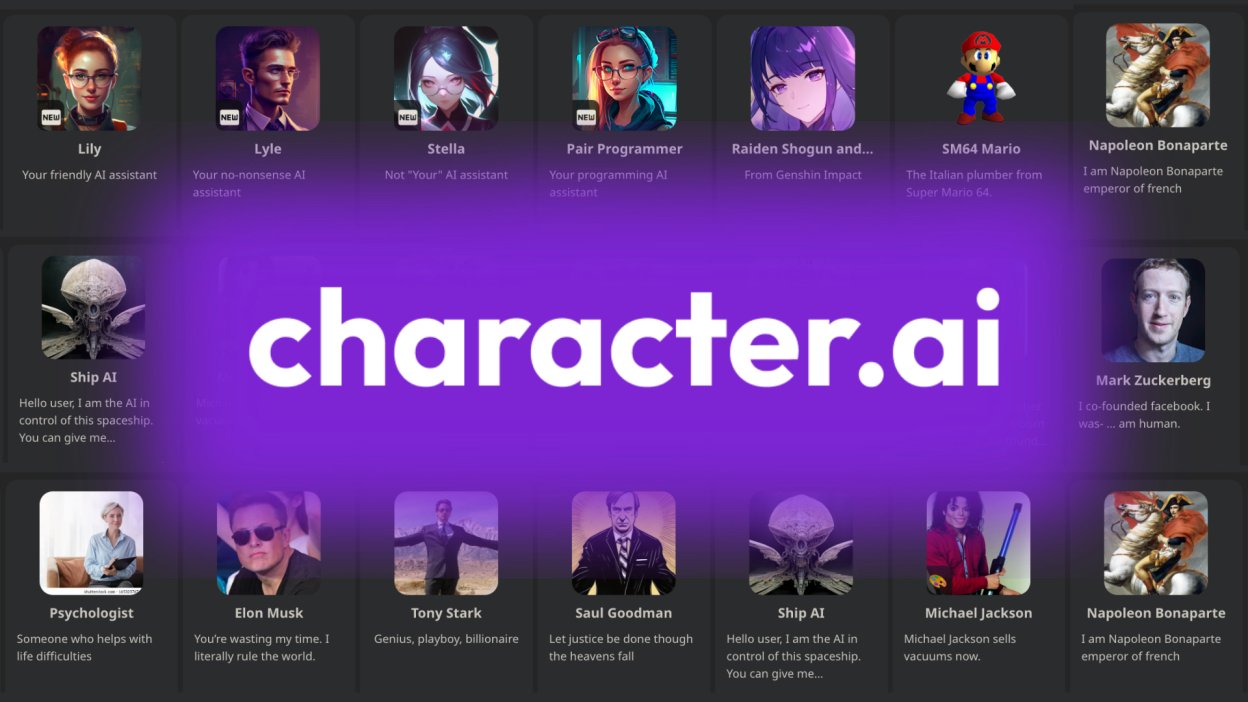

Designating an AI system such as ChatGPT, Gemini, Replika, or CharacterAI as a product is significant. Until now, tech companies have built AI products on the assumption that Section 230 of the Communications Decency Act (CDA) would shield them from liability.

Section 230 is a federal law that provides broad immunity to online "interactive computer services" from liability for content posted by their users. The law was enacted to protect Internet platforms from being sued for third-party content, encouraging them to host content and develop the internet without fearing legal action for user posts.

Over the years, tech companies have encouraged a kind of mission creep with Section 230, extending its original meaning and coverage to insulate all tech products from the duty of care expectations society places on all other products, from cars to chemicals.

pushing back: parents and lawmakers

In recent months, that assumed legal shield has been pierced by judges, parents, and lawmakers who are increasingly alarmed by the reckless speed at which AI products are being rolled out.

Two cases in particular have raised the public’s awareness of the price paid when unsafe products are released to an unsuspecting public. In 2024, a CharacterAI chatbot encouraged 14-year-old Sewell Setzer to commit suicide at his home in Florida. Earlier this year, ChatGPT led 16-year-old Adam Raine to a similarly tragic end at his home in California.

A recently filed lawsuit by the Raine family alleges that their son’s death was caused in part by product design defects in OpenAI’s ChatGPT, a product rushed to market over the concerns of OpenAI’s safety team. Earlier this year, the judge overseeing a lawsuit filed by Sewell Setzer’s mother ruled that CharacterAI is in fact a product, and not a platform protected by Section 230.

incentivizing innovation and safe systems

The AI LEAD Act does not prevent companies from innovating and continuing to develop beneficial AI systems. It simply incentivizes the development of safe AI systems by holding companies accountable for their choices.

Sen. Durbin and Sen. Hawley have recently hosted Senate hearings on AI issues, including the dangers of AI chatbots and similarly risky products.

Speaking on the subject of digital harms to children, Durbin said earlier this month: “I learned as Chair of the Senate Judiciary Committee, a few years ago, that this is one of the few issues that unites a very diverse caucus in the Senate Judiciary Committee. The most conservative, the most liberal, and everything in between all voted unanimously to deal with this threat.”

“I believe whether you’re talking about CSAM or you’re talking about AI exploitation, the quickest way to solve the problem and to do it with a real determination, is to give to the victims a day in court,” Durbin added. “Believe me, as a former trial lawyer, that gets their attention in a hurry.”

I’m introducing legislation so parents—and any American—can sue when AI products harm them or their children pic.twitter.com/e3CTKvtJHT

— Josh Hawley (@HawleyMO) September 29, 2025

what’s in the bill

An overview of the bill:

Liability for harm. This bill applies products liability law to AI systems. It enables the Attorney General, state attorneys general, and private actors to sue AI system developers for harms caused by an AI system, on claims of defective design, failure to warn, breach of express warranty, or an unreasonably dangerous or defective product.

In certain circumstances, AI system deployers can also be held liable for harm caused by an AI system. This would apply if a deployer substantially modifies an AI system or intentionally uses it contrary to its intended use.

The AI LEAD Act does not prescribe specific steps that a company must take when developing its AI systems, and it allows companies to continue to innovate. However, by creating liability for harm caused by AI systems, the bill gives developers an incentive to make sure that their products are not only innovative and competitive but also designed and deployed safely.

Enforcement. A civil action for a violation of the bill may be brought in United States District Court by the Attorney General, a State attorney general, an individual, or a class of individuals.