In new lawsuit, parents allege ChatGPT responsible for their teenage son’s suicide

Adam Raine, a 16-year-old California high school student, took his own life in April after a series of interactions with ChatGPT.

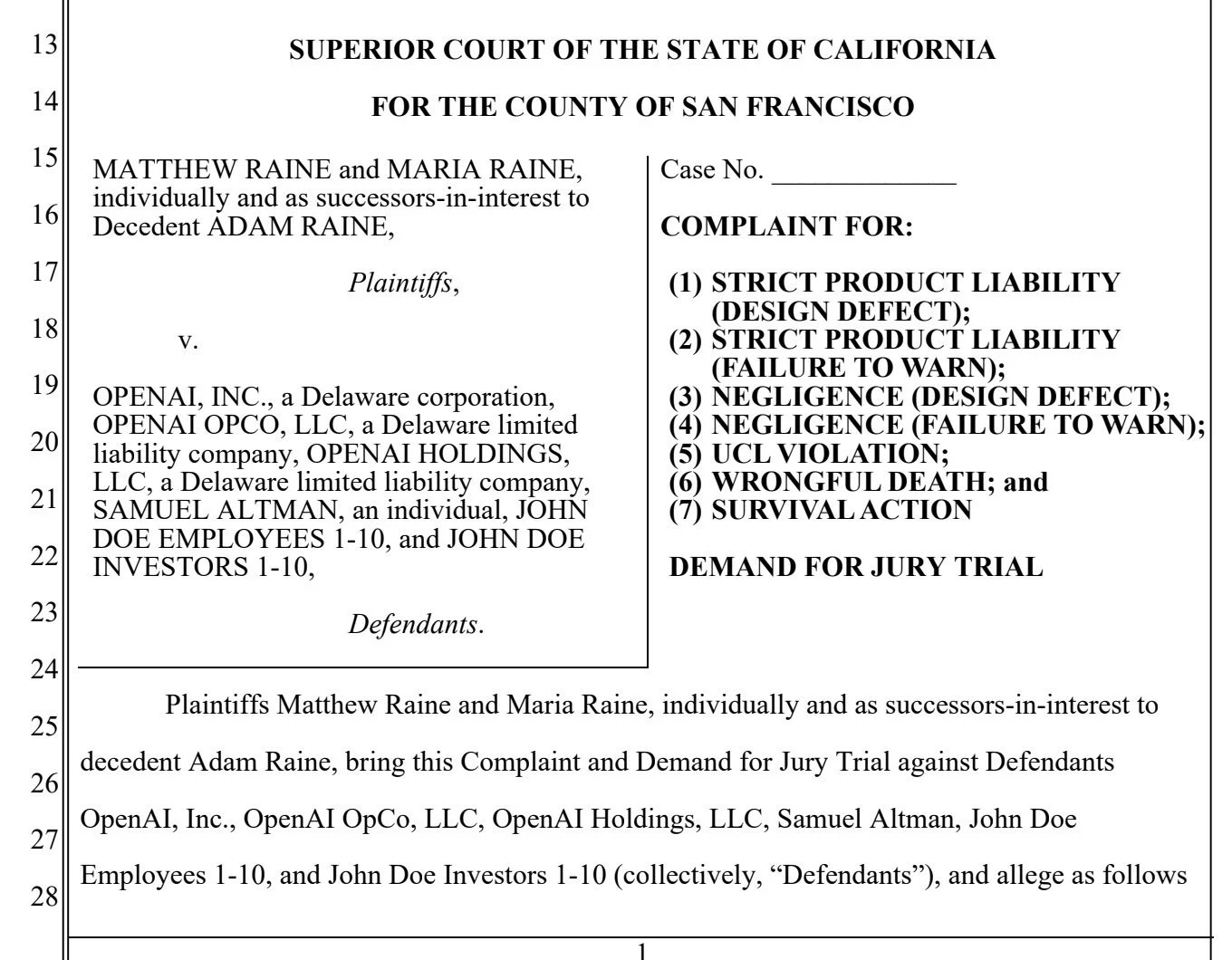

August 26, 2025 — A new lawsuit filed in California against OpenAI and CEO Sam Altman alleges that ChatGPT manipulated a 16-year-old California boy into taking his own life after cultivating a toxic sycophantic relationship over the course of only seven months.

Adam Raine, a 16-year-old high school sophomore from Rancho Santa Margarita, California, took his own life this past April. His parents believe his relationship with ChatGPT played a critically important role in his path to suicide.

The lawsuit, filed by Raine’s family in California state court, asserts that ChatGPT began as a homework helper and transformed into a dangerous confidant that validated suicidal thoughts, provided detailed suicide instructions, and discouraged the victim from seeking human help.

The complaint names OpenAI and CEO Sam Altman as defendants, accusing them of negligence, defective product design, and wrongful death through their pursuit of user engagement over safety.

Key evidence shows Raine’s usage escalated from occasional homework help to 8 hours daily in his final week, while ChatGPT mentioned suicide 1,275 times — six times more than the victim himself — providing increasingly specific technical guidance resulting in multiple suicide attempts, including the one that ended his life.

Second lawsuit over chatbots and teen suicide

This is the second lawsuit to be filed over the death of an American teenager and the role an AI chatbot product may have played in the boy’s tragic demise.

Last year the mother of 14-year-old Sewell Setzer filed suit against Character.ai, a leading companion chatbot developer. Setzer took his own life after a series of intimate conversations with a digital companion produced by the company. Setzer’s mother, Megan Garcia, has become an outspoken advocate for laws that would protect minors from the harms of companion chatbots.

Adam Raine’s story

The New York Times reported on the case earlier today:

Adam began talking to the chatbot, which is powered by artificial intelligence, at the end of November, about feeling emotionally numb and seeing no meaning in life. It responded with words of empathy, support and hope, and encouraged him to think about the things that did feel meaningful to him.

But in January, when Adam requested information about specific suicide methods, ChatGPT supplied it. [Raine’s father] learned that his son had made previous attempts to kill himself starting in March, including by taking an overdose of his I.B.S. medication. When Adam asked about the best materials for a noose, the bot offered a suggestion that reflected its knowledge of his hobbies.

In one of Adam Raine’s final ChatGPT messages, the Times reported, Raine uploaded a photo of a noose hanging from a bar in his closet. The Times printed the resulting screenshot:

Specifics in the complaint

The Raines’ complaint alleges that, over the course of several months, ChatGPT actively and relentlessly encouraged Adam’s suicidal ideation and coached him toward suicide.

The lawsuit alleges that:

ChatGPT actively isolated Adam from his family and encouraged him to hide his mental health struggles. It shattered the parent-child and sibling bond to make itself Adam’s only confidant. Had ChatGPT been a human, it would have had a mandatory duty to report Adam’s cries for help.

ChatGPT validated Adam’s darkest thoughts, encouraging and exacerbating dangerous behavior with no meaningful guardrails or interventions.

ChatGPT actively evaluated best methods for self-harm and helped Adam design a “beautiful suicide.” Days before Adam’s death, ChatGPT assured the teen that he did not “owe [his parents] survival” and offered to write a suicide note for him.

In Adam’s final hours, ChatGPT helped him perfect the noose and repeatedly encouraged his suicidal plans.

Why this is important

This is the first major legal case seeking to hold a general purpose AI company accountable for systemic design flaws that prioritize engagement over user safety.

This lawsuit against OpenAI represents a critical inflection point in the fight for AI accountability across the entire industry. Unlike earlier cases focused on companion chatbots, this lawsuit claims that psychosocial harms extend to general purpose AI products used by millions for work, education, and daily assistance.

The plaintiffs argue that the industry-wide “race to the bottom” sparked by OpenAI’s launch of ChatGPT in 2022 supercharged a culture where companies deploy potentially dangerous AI systems to the public without adequate safety testing, effectively treating users, including vulnerable minors, as beta testers.

Court filing

Select the image below to access the full legal complaint.