New York’s RAISE Act authors give their pitch as Gov. Hochul mulls over AI bill

New York’s RAISE Act would create safety protocols for a handful of the world’s most powerful AI models. The bill was approved by the legislature in June and now awaits Gov. Kathy Hochul’s signature. (Illustration by Alex Shuper for Unsplash+)

July 11, 2025 — On June 12th the New York State legislature passed the Responsible AI Safety and Education Act (RAISE Act, S 6953B), which focuses on ensuring the safety of AI models that cost more than $100 million to train and exceed a set computational threshold.

The legislation aims to prevent future AI models from unleashing “critical harm,” defined as the serious injury or death of 100 or more people or at least $1 billion in damages. A key focus is ensuring that AI systems aren’t misused to carry out chemical, biological, radiological or nuclear attacks.

The RAISE Act would require frontier model developers to establish safety and security protocols, implement safeguards, publish a redacted version of those protocols, and allow state officials access to the unredacted protocols upon request.

The measure now sits on the desk of Gov. Kathy Hochul, who must decide whether to sign or veto the bill.

talking over the bill with an NYU tech policy leader

Earlier this week the measure’s co-authors, Sen. Andrew Gounardes and Assemblymember Alex Bores, spoke with Scott Babwah Brennen, Director of the Center on Technology Policy at New York University, as part of a webinar on the RAISE Act and its potential effects.

This is an excerpt of that conversation, edited for clarity. A video of the full conversation is available here.

Scott Babwah Brennen: How would you describe the RAISE Act?

Assemblymember Alex Bores: This bill only applies to the largest AI developers. It largely holds them to less than they have already publicly committed to doing, in order to keep everyone in the city, state, country, and world safe from some pretty extreme risks. Those risks include the creation of bioweapons, or other crimes that could be committed. These are risks that AI developers themselves have said are real concerns they are looking out for.

Moderator: Scott Babwah Brennen

Dr. Scott Babwah Brennen is the Director of NYU's Center on Technology Policy and a Policy Research Affiliate at NYU's Center for Social Media and Politics.

‘requiring seatbelts’ on the most powerful AI models

Scott Babwah Brennen: Senator, do you want to add on to that?

Sen. Andrew Gounardes: Yeah, I would describe it using an analogy. We are requiring the installation of seatbelts and airbags in Hummers that can travel as fast as Lamborghinis. That's basically what we are trying to do.

And I think if you ask anyone—particularly ask anyone in the tech world—would they put their kids in a car without a seatbelt today? Absolutely not, because they know that it's a commonsense guardrail to protect against a worst-case inevitability, which is a crash.

We're trying to require the same types of guardrails here. We know this technology is here to stay, just as we know the automobile is here to stay. We should have seatbelts in the cars. We should have guardrails around this technology. Simple as that.

RAISE Act Co-author: Andrew Gounardes

Sen. Andrew Gounardes represents the 26th District (Brooklyn) and is Chairman of the Senate Committee on Budget and Revenue.

Scott Babwah Brennen: All right, so let's get into the weeds. I want to talk about what those guardrails specifically are. I know the bill has gone through some amendments. What does the final version of the bill require of frontier model developers?

create and share safety protocols

Sen. Andrew Gounardes: The bill requires a few really basic things.

It requires companies that are developing these frontier models to create and share safety and security protocols before they deploy these models publicly.

It also requires them not to deploy models that have demonstrated unreasonable risk of a critical harm. And we define ‘critical harm’ in the bill at certain thresholds.

It requires them to report safety incidents. So there is some transparency as these models are being trained and tested and developed. Like, [report the fact that] something didn't work right here. Or something didn't meet our expectations here. There's an orange flag of concern. There's a red flag of concern.

And then it requires frontier model developers to complete an annual assessment against those safety plans.

That's really it. That's what this bill does at its core, and why I think of it as guardrails and not really regulation in that respect. I'll let Alex fill in any details I might have missed.

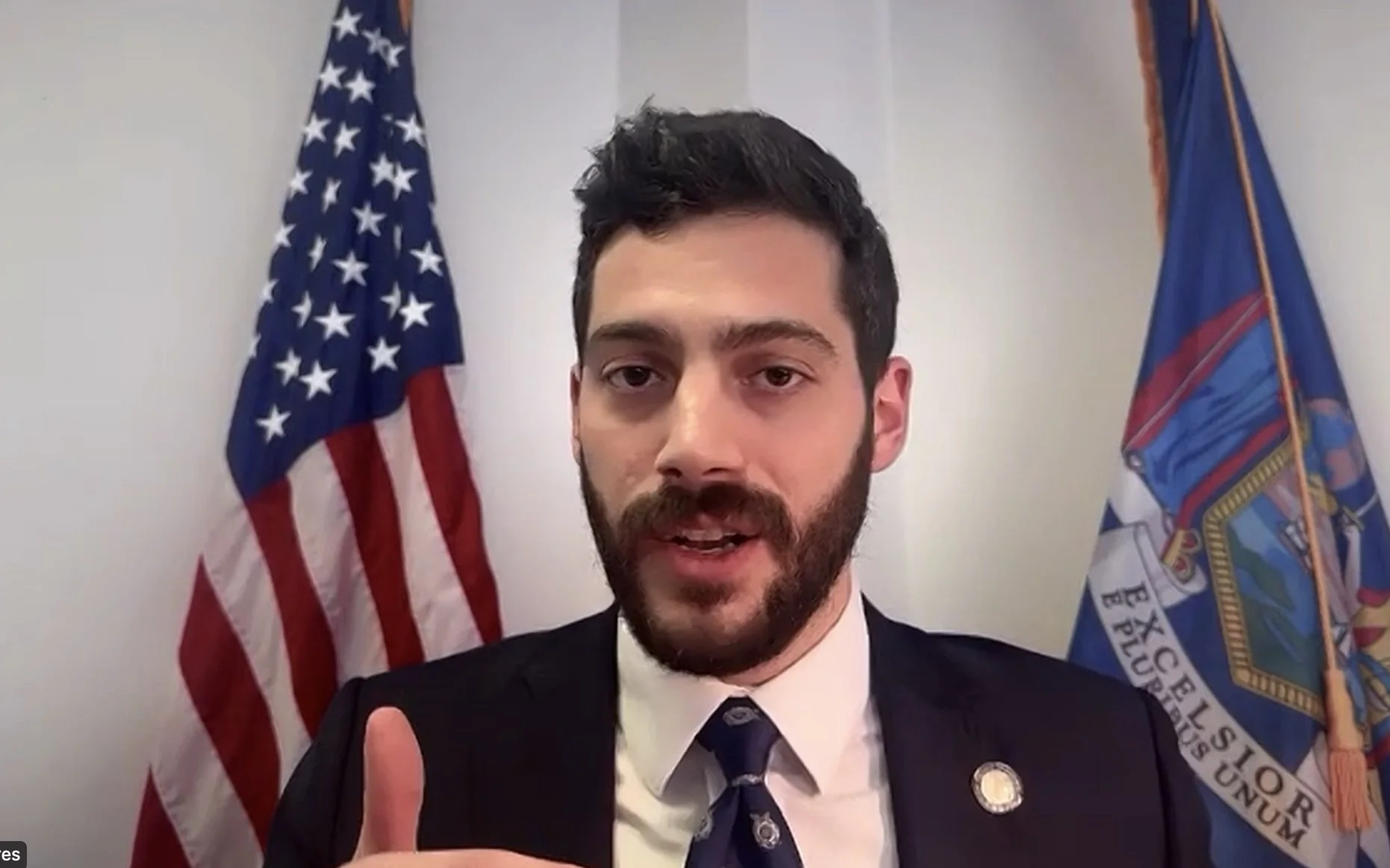

RAISE Act Co-author: Alex Bores

Assemblymember Alex Bores represents the 73rd District (Manhattan).

Asm. Alex Bores: No, all the details were there. I'll be the analogy guy for this round.

This is like the tobacco companies knowing that cigarettes cause cancer, but denying it publicly and still releasing their products. Or the oil companies knowing that their products cause climate change, but denying it publicly and still releasing them.

This is saying, you large tech companies, you design your own safety protocols ahead of time when you're not trying to make your next quarterly goal, when you're not worried about the heat of the race. Define what safety would be. And then if your models fail your own tests, we want to know about it.

‘critical harms’ and what that means

Scott Babwah Brennen: Let’s jump ahead a little bit. You’re focused on critical harms here. What are you talking about, specifically?

Asm. Alex Bores: So a ‘critical harm’ is defined in the bill as causing 100 deaths or a billion dollars in damages by one of two routes, either aiding in the development of a chemical, biological, radiological, or nuclear weapon, or committing a crime under the penal law with no meaningful human interaction.

This is not every risk in AI. It's not the most immediate risks in AI. It's not the most common risks in AI. There's legislation and activity that both the senator and I have sponsored separately and our colleagues have done that focus on all of those really, really pertinent issues.

The RAISE Act is dealing with just the subset of very extreme risks. And I'll add that, for a critical harm, there are defenses in the bill: the model needs to have been a material cause of that harm, the harm has to be foreseeable, and there must have been some action you could have taken that would have prevented it.

It is an extremely limited set of risks that we're addressing.

RAISE Act would codify pledges companies already made

Scott Babwah Brennen: Are there specific examples of frontier model developers engaging in activity that would now be prohibited under the Act?

Asm. Alex Bores: Well, I don't know that there's been enough transparency to allow us to answer that question. But this Act isn’t saying that every company is behaving badly.

What this is saying is that almost every AI company has signed a public document that says they're going to do all of this and more. But [those commitments] are not written into law anywhere. And what the companies have said publicly as well is that if they see their competitors walking back those commitments, that might change the way they behave.

Recently we've seen more AI models being released without the safety cards that were there before, without the third-party audits that were promised.

And so this legislation is responding to that call and [establishing] a baseline so that we ensure the continued good behavior.

Who exactly is covered by the Act?

Scott Babwah Brennen: Who exactly is covered by the Act? How do you define ‘frontier model developer’ and what counts as a covered platform here?

Sen. Andrew Gounardes: This bill only applies to a handful of the largest AI labs on the planet. It specifies that they have to have spent $100 million, in total, training frontier models. And so there's only, I don't know, 8, 10, maybe 11 labs right now that approach that threshold. We're talking about a very small number of the largest actors who are operating in this space.

More specifically, the bill only applies to frontier models: models trained using more than 10 to the 26th power computational operations, with a compute cost of more than $100 million. And it applies to large developers: these are generative AI developers that have trained at least one frontier model and have spent over $100 million in the aggregate training these frontier models. There are exemptions for universities that are using these models purely for academic research. That's really who we are talking about.

Scott Babwah Brennen: There's another class of companies that's included here, right? It's not just the developers of the original model.

Asm. Alex Bores: There are two criteria. There's the large developer, as the Senator said, where you have to spend $100 million in training. That is its own category. If you have not spent $100 million on compute on the final training of your models, this bill does not apply to you.

Second, there's the definition of a frontier model, right? You need to have both of those things. A frontier model can either be trained with 10 to the 26th FLOPs at a cost of $100 million, or be created via the specific process of knowledge distillation, with $5 million spent on that process. And that distillation needs to be based on a model that is itself a frontier model. That provision applies to everyone, but it is certainly somewhat inspired by what we saw with DeepSeek, where they used knowledge distillation and spent over $5 million distilling off of o1. Now, o1 wasn't a frontier model, so the DeepSeek model that is in everyone's mind wouldn't be one either. But the provision is meant to capture those kinds of future cases.

The really important point here, and something I think some of the bill’s critics have either not understood or willfully not understood, is that even if you use knowledge distillation, you need to, as a company overall, have spent $100 million in compute costs on the final training runs of your models. So no startup that's spending $5 million or $10 million or $75 million is going to be covered, regardless of whether it uses distillation.
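To make the two-part test the co-authors describe easier to follow, here is a minimal sketch in Python. It encodes only the thresholds as stated in this conversation (more than 10^26 operations and more than $100 million for a frontier model, more than $5 million for a model distilled from a frontier model, and more than $100 million in aggregate frontier training spend for a large developer). It is not the statutory text, and the class and function names (`Model`, `is_frontier`, `is_large_developer`, `is_covered`) and the `academic_research_only` flag are hypothetical illustrations.

```python
from dataclasses import dataclass
from typing import List, Optional

# Thresholds as described by the co-authors in this interview (an assumption,
# not a reading of the statutory text).
FRONTIER_FLOP_THRESHOLD = 1e26            # training operations
FRONTIER_COST_THRESHOLD = 100_000_000     # USD compute cost for one frontier model
DISTILLATION_COST_THRESHOLD = 5_000_000   # USD compute cost for a distilled model
LARGE_DEVELOPER_SPEND = 100_000_000       # USD aggregate spend on frontier training


@dataclass
class Model:
    """Hypothetical record of a trained model."""
    name: str
    training_flops: float
    compute_cost_usd: float
    # Hypothetical field: the parent model, if this one was built via distillation.
    distilled_from: Optional["Model"] = None


def is_frontier(m: Model) -> bool:
    # Path 1: more than 10^26 operations AND more than $100M in compute cost.
    if m.training_flops > FRONTIER_FLOP_THRESHOLD and m.compute_cost_usd > FRONTIER_COST_THRESHOLD:
        return True
    # Path 2: distilled from a model that is itself frontier, costing more than $5M.
    parent = m.distilled_from
    return (
        parent is not None
        and is_frontier(parent)
        and m.compute_cost_usd > DISTILLATION_COST_THRESHOLD
    )


def is_large_developer(models: List[Model], academic_research_only: bool = False) -> bool:
    # Hypothetical flag standing in for the university academic-research exemption.
    if academic_research_only:
        return False
    frontier = [m for m in models if is_frontier(m)]
    # At least one frontier model, and over $100M spent in aggregate training them.
    return bool(frontier) and sum(m.compute_cost_usd for m in frontier) > LARGE_DEVELOPER_SPEND


def is_covered(model: Model, developer_models: List[Model],
               academic_research_only: bool = False) -> bool:
    # "You need to have both of those things": a frontier model AND a large developer.
    return is_frontier(model) and is_large_developer(developer_models, academic_research_only)


if __name__ == "__main__":
    lab_model = Model("lab-model", 2e26, 150_000_000)
    startup_model = Model("startup-distill", 1e24, 10_000_000, distilled_from=lab_model)
    print(is_covered(lab_model, [lab_model]))              # True
    print(is_frontier(startup_model))                      # True
    print(is_covered(startup_model, [startup_model]))      # False
```

The usage at the bottom mirrors Bores's point: a startup that spends $10 million distilling a frontier model produces a model that meets the frontier definition, but the startup is still not covered because its own aggregate training spend is far below $100 million.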