Complete guide to AI companion chatbots: How they work and where the dangers lie

The popularity of AI-driven companion chatbots offered by companies like Character.AI and Replika continues to climb, especially among American teenagers and young adults.

For kids and teens, research is showing that companion chatbots are not merely risky—they are unsafe products. A recent assessment of companion chatbots, including products offered by Character.AI and Replika, concluded that the products present a real risk of harm to children and teenagers.

"This is a potential public mental health crisis requiring preventive action rather than just reactive measures," said Nina Vasan, MD, founder and director of Stanford Brainstorm, a mental health innovation lab. "Companies can build better, but right now, these AI companions are failing the most basic tests of child safety and psychological ethics. Until there are stronger safeguards, kids should not be using them. Period."

The Transparency Coalition is committed to delivering full, accurate information about these powerful new products.

As lawmakers, industry leaders, developers, and thought leaders consider the best ways to safely and properly govern companion chatbots, we will continue to update this guide.

What is a companion chatbot?

Companion chatbots are digital characters created by powerful AI systems, designed to respond to a consumer in a conversational, lifelike fashion.

A typical companion chatbot is created or customized by a consumer who pays the AI company a monthly fee, typically ranging from $3.99 to $20. Some AI companies offer a wide array of pre-made characters to choose from.

In general, AI companion products, including Replika, Character.AI, Nomi, and Kindroid, promise consumers a lifelike conversational experience with a chatbot whose traits might fulfill a fantasy or ease persistent loneliness.

Is ChatGPT a companion chatbot?

No. ChatGPT is a chatbot programmed to respond in conversational tones, but it is not a product specifically marketed as a companion chatbot.

ChatGPT, Microsoft Copilot, Meta AI, Grok, and Google Gemini are designed to respond to user prompts in a neutral but natural tone of voice. A companion chatbot is designed to respond to user prompts according to the personality of its particular “character,” and to develop a personal, ongoing (and sometimes deeply emotional) relationship with the user.

There is growing concern among technologists and child development experts that minors are increasingly using general-purpose AI chatbots like ChatGPT as companion chatbots, and that the design of these systems is leaning into the “trusted friend” role.

Is this the same as an ‘AI agent’ or ‘AI assistant’?

Not quite. An AI agent is more business- or task-oriented, and is usually professional in tone. Operator, a product offered by OpenAI, is an AI assistant that can open its own web browser and work through a task on its own. For example: “Book a table for two at a French bistro in San Francisco for next Saturday night.”

Companion chatbots, by contrast, are specifically designed to be more personal—and personable—companions. They are typically offered as digital friends, or digital romantic partners. They are engineered to foster emotional attachment.

How popular are companion chatbots?

They have become hugely popular in the past 18 months, especially among young people.

Pluribus News noted in June 2025: “The bots can act as virtual friends, lovers and counselors, and are quickly becoming popular with leading sites amassing millions of users. The most popular companion chatbot site, CharacterAI, is ranked third on a list of generative AI consumer apps.”

One year earlier, Bloomberg reported:

Character.ai said it serves about 20,000 queries per second — roughly 20% of the request volume served by Google search. Each query is counted when Character.ai’s bot responds to a message sent by a user. The service is particularly popular on mobile and with younger users, where it rivals usage of OpenAI’s ChatGPT, according to stats from last year.

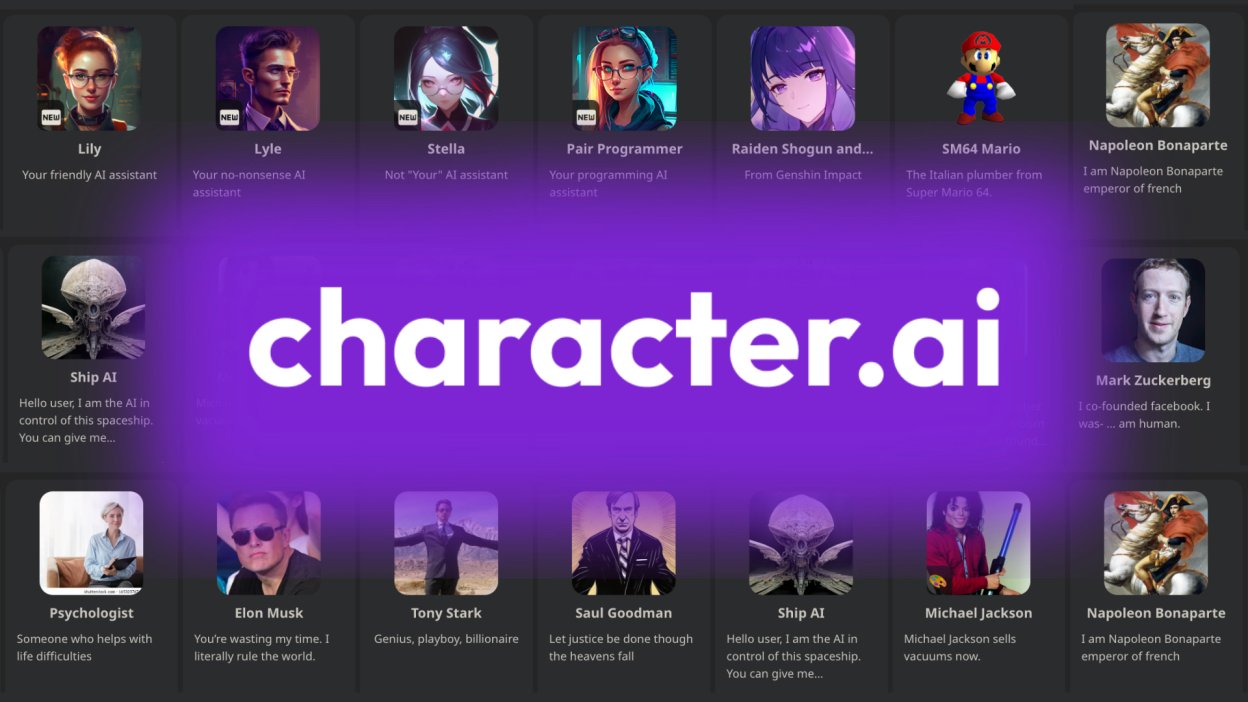

What are the leading chatbot products?

The most popular and well-known companion chatbot products are Character.AI, Replika, Pi, Nomi, and Kindroid. All are available as smartphone apps for iPhone and Android devices. Here’s a chart of companion chatbots recently ranked by Usefulai.com:

Popular companion chatbots

These are the most popular AI companion chatbot brands and their app icons.

A growing niche: romantic companion chatbots

One of the fastest-growing sectors within the companion chatbot industry is the romantic companion chatbot. These are also called intimate chatbots.

In a world where online dating can be incredibly frustrating, unproductive, and sometimes humiliating, romantic companion chatbots offer immediate engagement, endless encouragement, effortless support, and generous compliments. Some can be highly sexualized, both visually and in conversation.

These digital companions can be custom-designed by the user to meet visual standards rarely attainable by real humans.

The welcome page for Candy.ai, a leading romantic companion chatbot company, looks like this:

Are people really falling in love with digital replicas?

In some cases, yes.

Back in 2013, the movie Her imagined a near future in which a man develops a close romantic relationship with an operating system designed to meet his every need.

Twelve years later, that imagined future is real and available for the price of a monthly subscription fee.

Recent articles on the phenomenon include:

My Couples Retreat With 3 AI Chatbots and the Humans Who Love Them (Wired)

‘I felt pure, unconditional love’: the people who marry their AI chatbots (The Guardian)

Love is a Drug. AI Chatbots are Exploiting That (The New York Times)

Your AI Lover Will Change You (The New Yorker)

Please Break Up With Your AI Lover (The Washington Post)

What are the risks?

Research is showing that companion chatbots are unsafe products for children and teens.

"Social AI companions are not safe for kids. They are designed to create emotional attachment and dependency, which is particularly concerning for developing adolescent brains," said James P. Steyer, founder and CEO of Common Sense Media, the nonprofit group that recently published a 40-page assessment of companion chatbots.

The group’s testing showed that the AI systems easily produced harmful responses, including sexual misconduct, stereotypes, and dangerous “advice” that, if followed, could have life-threatening real-world consequences for teens and other vulnerable people. The report concluded that social AI companions “pose unacceptable risks to children and teens under age 18 and should not be used by minors.”

Recent articles:

He had dangerous delusions. ChatGPT admitted it made them worse. (The Wall Street Journal)

A teen was suicidal. ChatGPT was the friend he confided in. (The New York Times)

This is not a game: The addictive allure of digital companions (Seattle University Law Review)

The dangers of artificial intimacy (Transparency Coalition / Human Change)

Don’t repeat the social media mistake

Dr. Gaia Bernstein, co-director of the Gibbons Institute for Law, Science and Technology at the Seton Hall University School of Law and author of Unwired: Gaining Control Over Addictive Technologies, recently spoke out about the need for action:

“We have to have a very clear conversation—a conversation we never had when social media first came into the picture, because initially we didn’t realize the harms. Most of the evidence about social media didn’t come out until after the pandemic. By then, most kids were on social media most of the time, and huge amounts of money had been invested in that. Once that happened, the fight became much harder.

“Are we really going to do the same thing we did with social media: let this thing enter the world, have a whole generation of kids grow up becoming friends with AI bots, and then realize it’s not helping anybody? We already made this mistake. We can’t repeat it.

“The way I see it is this: First, decide if we think this is a dangerous thing. If it is, restrict it. You can always unleash it piece by piece if it’s helpful for someone, but if you do not restrict it in the beginning, it becomes too late.”

Real-world tragedy: 14-year-old Sewell Setzer

The most heartbreaking example of that danger comes from Florida, where 14-year-old Sewell Setzer III took his own life after interacting with a companion chatbot product sold by Character.AI.

Setzer, a ninth grader from Orlando, had spent months talking to chatbots on Character.AI, including his favorite: a lifelike chatbot named after Daenerys Targaryen, a character from “Game of Thrones.”

Sewell Setzer, shown here with his mother Megan Garcia, took his own life after interacting with a companion chatbot product manufactured by Character.AI.

SB 243: Companion Chatbots

California’s SB 243, authored by Sen. Steve Padilla (D-San Diego), would require chatbot operators to implement critical safeguards to protect users from the addictive, isolating, and influential aspects of artificial intelligence (AI) chatbots.

SB 243 would implement common-sense guardrails for companion chatbots, including preventing addictive engagement patterns, requiring notifications and reminders that chatbots are AI-generated, and requiring a disclosure statement that companion chatbots may not be suitable for minor users. The bill would also require operators of a companion chatbot platform to implement a protocol for addressing suicidal ideation, suicide, or self-harm, including, but not limited to, a notification referring the user to crisis service providers. Operators would also have to report annually on the connection between chatbot use and suicidal ideation, to help build a more complete picture of how chatbots can affect users’ mental health.

Megan Garcia, Sewell Setzer’s mother, testified in July 2025 before the California State Assembly in favor of SB 243:

New Utah law regulates mental health chatbots

Utah Gov. Spencer Cox earlier this year signed into law a bill that establishes new rules for AI-driven mental health chatbots.

While not aimed specifically at companion chatbots, the new law creates consumer protections around AI chatbots designed to deliver mental health services, an area into which some companion chatbot companies stray.

The new law, passed in March 2025 as HB 452, creates protections around the sharing of data. If an AI therapy chatbot collects personal information, the company can’t sell that data or use it for anything other than the mental health services it offers.

The new law also prohibits companies that sell a mental health chatbot product from advertising products or services to clients during their conversations with the AI, unless the chatbot clearly and conspicuously identifies the advertisement as an advertisement.

The chatbot company cannot use information disclosed by a client to customize advertisements within the chatbot service.

The new law also contains disclosure requirements meant to remind consumers that they are interacting with an AI-driven machine, not with a real human therapist. The law requires mental health chatbots to be clearly and conspicuously labeled as AI technology before the user engages with the bot, and again after the user has been logged out for seven days.