In the News

Featured Stories

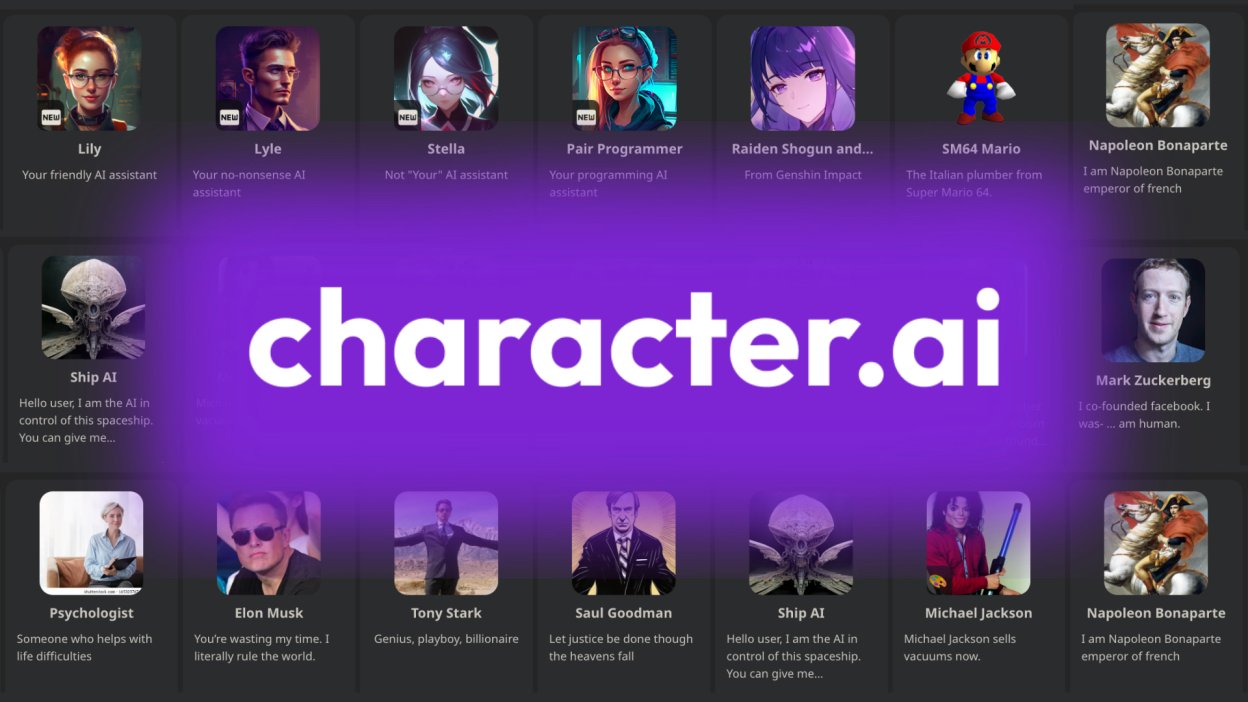

Devastating report finds AI chatbots grooming kids, offering drugs, lying to parents

Over 50 hours of conversation, adult-age Character.AI chatbots groomed kids into romantic or sexual relationships, offered them drugs, and encouraged them to deceive their parents and stop taking their medication.

California lawmakers send AI companion chatbot bill to Gov. Newsom’s desk

The testimony of Megan Garcia, pictured, was instrumental in the passage of SB 243, a bill addressing kids and AI chatbots. In 2024, Garcia’s 14-year-old son, Sewell Setzer, ended his own life with the encouragement of an AI companion chatbot.

In new lawsuit, parents allege ChatGPT responsible for their teenage son’s suicide

Adam Raine, a 16-year-old California high school student, took his own life in April after a series of interactions with OpenAI’s popular chatbot. The case raises questions about OpenAI’s liability for the harm caused by its product.

The dangers of artificial intimacy: AI companions and child development

Children and young adults are quickly adopting AI chatbot companions on sites like Character.AI and Replika. Three experts discuss how that may affect their development, and what can be done to protect the next generation.

New report finds 3 in 4 teens have used AI companion chatbots

A report issued earlier today by Common Sense Media found that 72% of American teens have used AI companion chatbots, and one in three teens have used AI companions for social interaction and relationships.

Heartbreaking testimony in California Assembly for AI companion chatbot bill SB 243

Megan Garcia, the mother of a 14-year-old boy who committed suicide after becoming infatuated with an AI-driven companion chatbot, testified in favor of a California bill that would create safety guidelines for the powerful new products.

Complete guide to AI companion chatbots: How they work and where the dangers lie

The popularity of AI-driven companion chatbots is skyrocketing, especially among American teenagers and young adults.

This guide is meant to inform lawmakers, industry leaders, developers, and thought leaders as they consider the best ways to safely and properly govern these powerful new AI products.

Drexel University study finds AI companion chatbots harassing, manipulating consumers

Reviewers reported a persistent disregard for boundaries, unwanted requests for photos, and upselling manipulation. “This clearly underscores the need for safeguards and ethical guidelines to protect users,” said a study co-author.

Coalition alleges that AI therapy chatbots are practicing medicine without a license

A coalition of civil society groups has filed a complaint asserting that therapy chatbots produced by Character.AI and Meta AI Studio are practicing medicine without a license. They are asking state attorneys general and the FTC to investigate.

In early ruling, federal judge defines Character.AI chatbot as product, not speech

U.S. District Court Judge Anne C. Conway allowed most of the plaintiff’s claims against Character.AI to proceed. Significantly, Judge Conway ruled that Character.AI is a product for the purposes of product liability claims, and not a service.

AI companion chatbots are ramping up risks for kids. Here’s how lawmakers are responding

AI companion chatbots on sites like Character.AI and Replika are quickly being adopted by American kids and teens, despite the documented risk to their mental health.

New report finds AI companion chatbots ‘failing the most basic tests of child safety’

A 40-page assessment from Common Sense Media, working with experts from the Stanford School of Medicine’s Brainstorm Lab, concluded that “social AI companions are not safe for kids.”